Get started

An overview of the TurboStarter AI starter kit.

TurboStarter AI is a starter kit with ready-to-use demo apps that helps you quickly build powerful AI applications without starting from scratch. Whether you're launching a small side project or a full-scale enterprise solution, it provides the structure you need to jump right into building your own unique AI application.

Features

TurboStarter AI comes packed with features designed to accelerate your development process:

Core framework

Monorepo setup

Powered by Turborepo for efficient code sharing and dependency management across web and mobile applications.

Next.js web app

Leverages Next.js for server-side rendering, static site generation, and API routes.

Hono API

Ultra-fast API framework optimized for edge computing with comprehensive TypeScript support.

React Native + Expo

Foundation for cross-platform mobile apps that share business logic with your web application.

AI

Vercel AI SDK

Complete toolkit for implementing advanced AI features like streaming responses and interactive chat interfaces.

LangChain

Powerful framework for building sophisticated AI applications with prompt management, memory systems, and agent capabilities.

Multiple AI providers

Seamless integration with OpenAI, Anthropic, Google AI, xAI, Meta, DeepSeek, Replicate, ElevenLabs, and more through a unified interface.

Specialized models

Full support for text generation, structured output, image creation, voice synthesis, and embedding models.

One-line model switching

Effortlessly switch between AI models or providers with minimal code changes.
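To illustrate the idea behind one-line model switching, here is a minimal, self-contained sketch. It does not use the kit's code or any real SDK: `TextModel`, `fakeProvider`, the `models` registry, and the model names `model-a` and `model-b` are all hypothetical stand-ins. The point is only that application code depends on a shared interface, so the active model is selected in a single place.

```typescript
// Hypothetical stand-ins for provider packages; not the kit's or any SDK's real API.
type TextModel = {
  id: string;
  generate: (prompt: string) => Promise<string>;
};

// Builds a fake provider whose models simply echo the prompt with a tag.
const fakeProvider =
  (provider: string) =>
  (modelName: string): TextModel => ({
    id: `${provider}:${modelName}`,
    generate: async (prompt: string) => `[${provider}:${modelName}] ${prompt}`,
  });

const openai = fakeProvider("openai");
const anthropic = fakeProvider("anthropic");

// Registry of available models, keyed by an application-level alias.
const models: Record<"fast" | "smart", TextModel> = {
  fast: openai("model-a"),
  smart: anthropic("model-b"),
};

// The one line to edit when switching models or providers.
const activeModel: TextModel = models.fast;

// Application code only knows about the shared TextModel interface.
async function summarize(text: string, model: TextModel = activeModel): Promise<string> {
  return model.generate(`Summarize: ${text}`);
}
```

Changing `models.fast` to `models.smart` would route every call to `summarize` through the other provider without touching the calling code.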

Data storage

Drizzle ORM

Type-safe ORM for efficient interaction with PostgreSQL (default) or other supported databases (MySQL, SQLite).

PostgreSQL database

Reliable storage for chat history, user data, and vector embeddings with optimized performance.

Vector embeddings

Built-in support for storing and retrieving vector embeddings for advanced retrieval-augmented generation.

Blob storage

Integrated S3-compatible storage for managing user uploads, AI-generated content, and documents.
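As a rough illustration of what retrieving vector embeddings involves, the sketch below ranks stored text chunks by cosine similarity to a query embedding. It is an in-memory toy, not the kit's implementation: the `StoredChunk` type and the `cosineSimilarity` and `topK` helpers are hypothetical, and in a real deployment the similarity search would typically run inside the database rather than in application code.

```typescript
// Hypothetical shape of a stored document chunk with its embedding.
type StoredChunk = { text: string; embedding: number[] };

// Cosine similarity: dot product divided by the product of vector lengths.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Embedding dimensions differ");
  const dot = a.reduce((sum, x, i) => sum + x * (b[i] ?? 0), 0);
  const norm = (v: number[]) => Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  const denominator = norm(a) * norm(b);
  // Treat zero vectors as having no similarity to anything.
  return denominator === 0 ? 0 : dot / denominator;
}

// Returns the k chunks most similar to the query, best match first.
function topK(query: number[], chunks: StoredChunk[], k: number): StoredChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map(({ chunk }) => chunk);
}
```

In a retrieval-augmented generation flow, the chunks returned by a search like this would be added to the prompt as context before calling the model.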

Authentication

Better Auth integration

Secure authentication system starting with anonymous sessions, extensible to email/password, magic links, and OAuth providers.

Rate limiting

Intelligent protection for API endpoints against abuse and overuse.

Credits-based access

Flexible system to manage and control AI feature usage with customizable credit allocation.

Backend API key management

Security-first approach ensuring sensitive API keys remain protected on the server side.
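The rate limiting and credits features above can be pictured with a small sketch. This is not the kit's code: `FixedWindowRateLimiter` and `CreditLedger` are hypothetical names, the state lives in memory, and a fixed-window counter is just one of several possible limiting strategies. A production setup would likely keep this state in a shared store.

```typescript
// Hypothetical fixed-window limiter: at most `limit` requests per key per window.
class FixedWindowRateLimiter {
  private windows = new Map<string, { start: number; count: number }>();
  private limit: number;
  private windowMs: number;
  private now: () => number;

  constructor(limit: number, windowMs: number, now: () => number = () => Date.now()) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.now = now;
  }

  // Returns true and counts the request if the key is still under its limit.
  allow(key: string): boolean {
    const t = this.now();
    let current = this.windows.get(key);
    if (!current || t - current.start >= this.windowMs) {
      // Start a fresh window for this key.
      current = { start: t, count: 0 };
      this.windows.set(key, current);
    }
    if (current.count >= this.limit) return false;
    current.count += 1;
    return true;
  }
}

// Hypothetical credit ledger: each AI action costs credits from a user's balance.
class CreditLedger {
  private balances = new Map<string, number>();

  grant(userId: string, amount: number): void {
    this.balances.set(userId, this.balance(userId) + amount);
  }

  balance(userId: string): number {
    return this.balances.get(userId) ?? 0;
  }

  // Deducts the cost only when the balance covers it.
  trySpend(userId: string, cost: number): boolean {
    const current = this.balance(userId);
    if (cost > current) return false;
    this.balances.set(userId, current - cost);
    return true;
  }
}
```

A request handler could call `allow` first and `trySpend` second, rejecting the request if either returns `false`, so that provider API calls are only made for permitted, paid-for requests.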

User interface

Tailwind CSS & shadcn/ui

Utility-first CSS framework and pre-designed components for rapid UI development.

Base UI

Accessible, unstyled components that provide the foundation for beautiful, functional interfaces.

Shared UI package

Centralized UI component library ensuring consistency across all applications in the monorepo.

Demo apps

TurboStarter AI includes several production-ready demo applications that showcase diverse AI capabilities. Use these examples to understand implementation patterns and jumpstart your own projects.

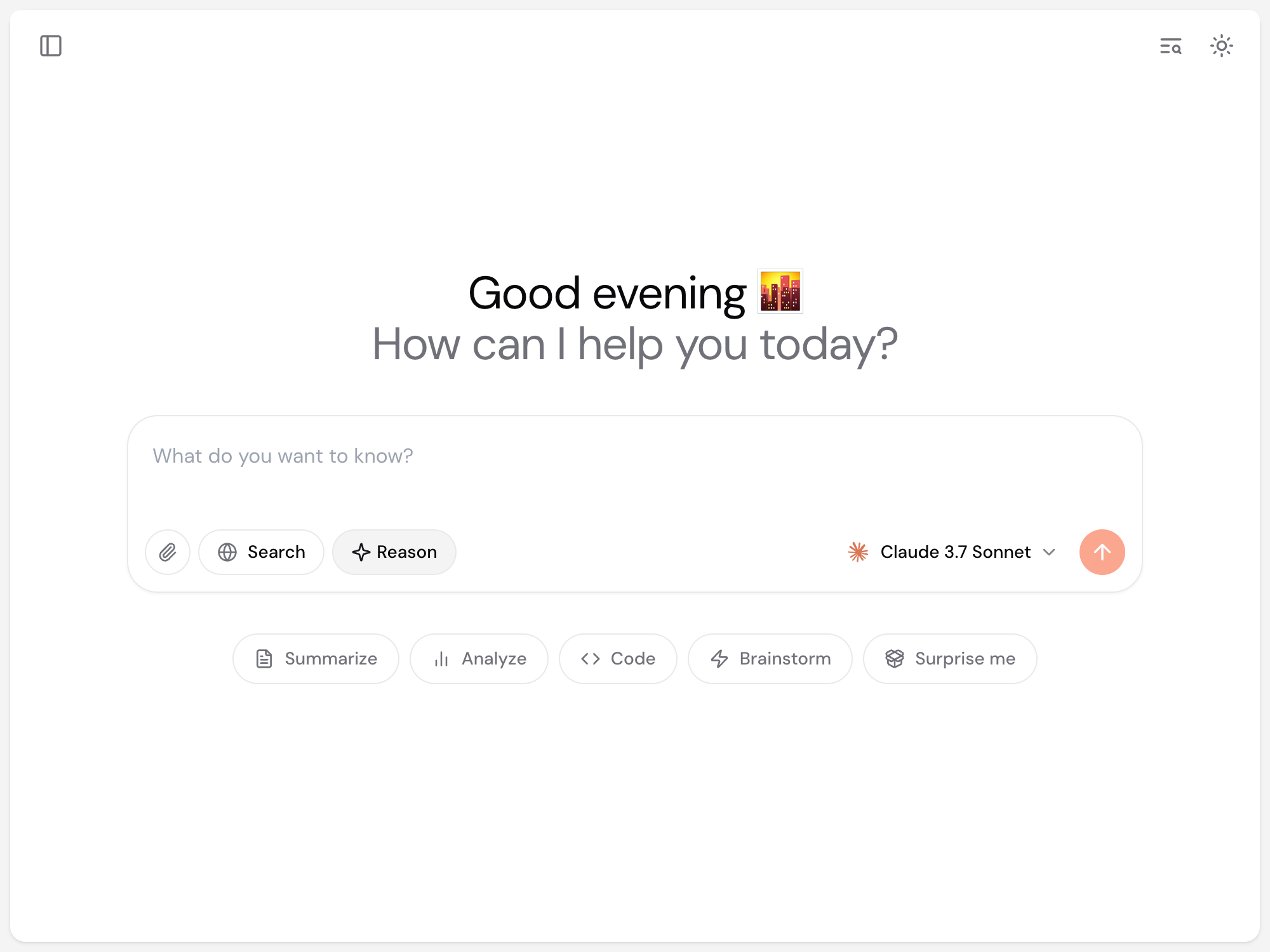

Chatbot

Build intelligent conversational experiences with an AI chatbot featuring contextual reasoning and real-time web search capabilities.

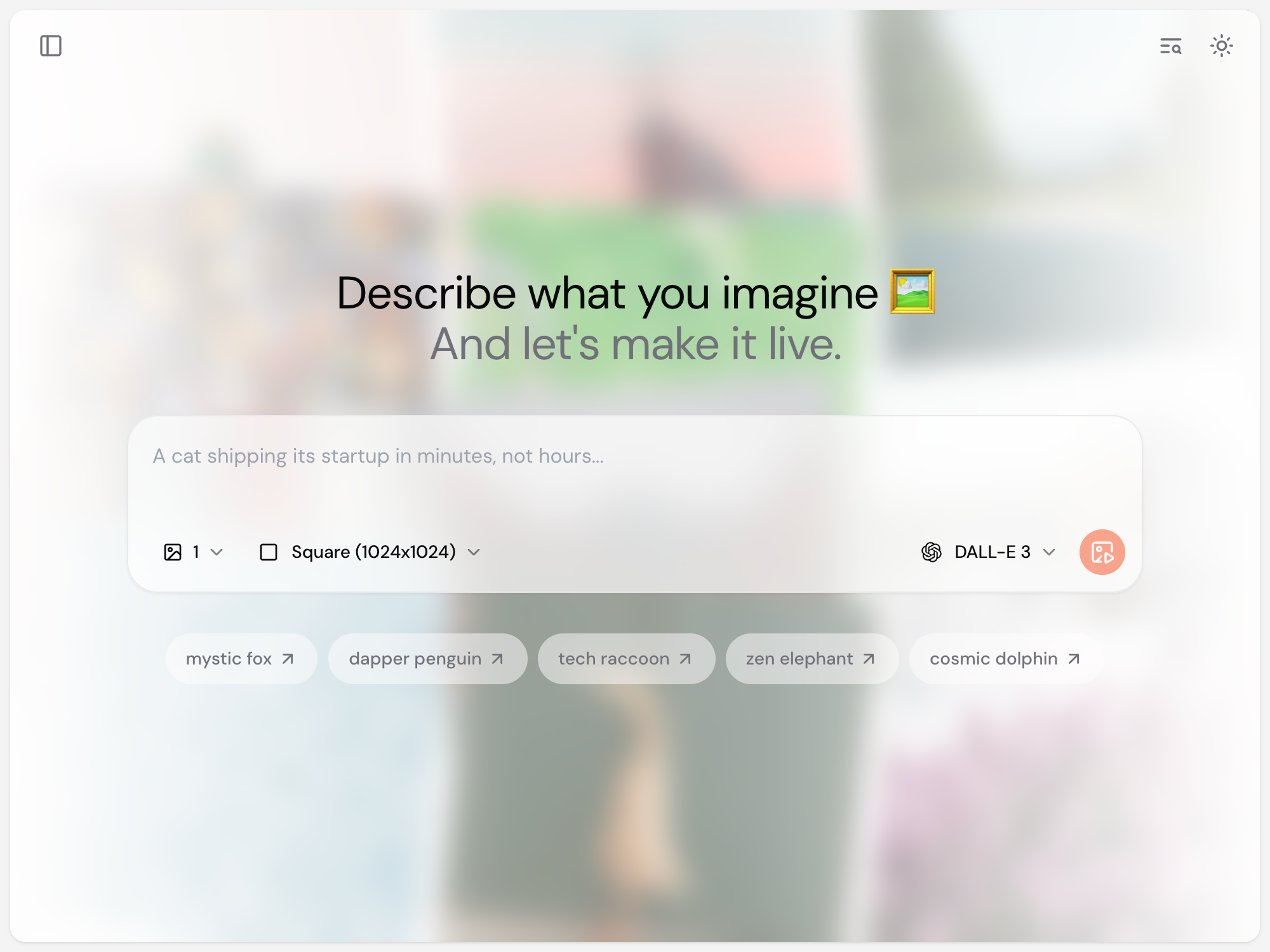

Image generation

Create compelling visuals with a versatile AI image generator supporting multiple models, styles, and output resolutions.

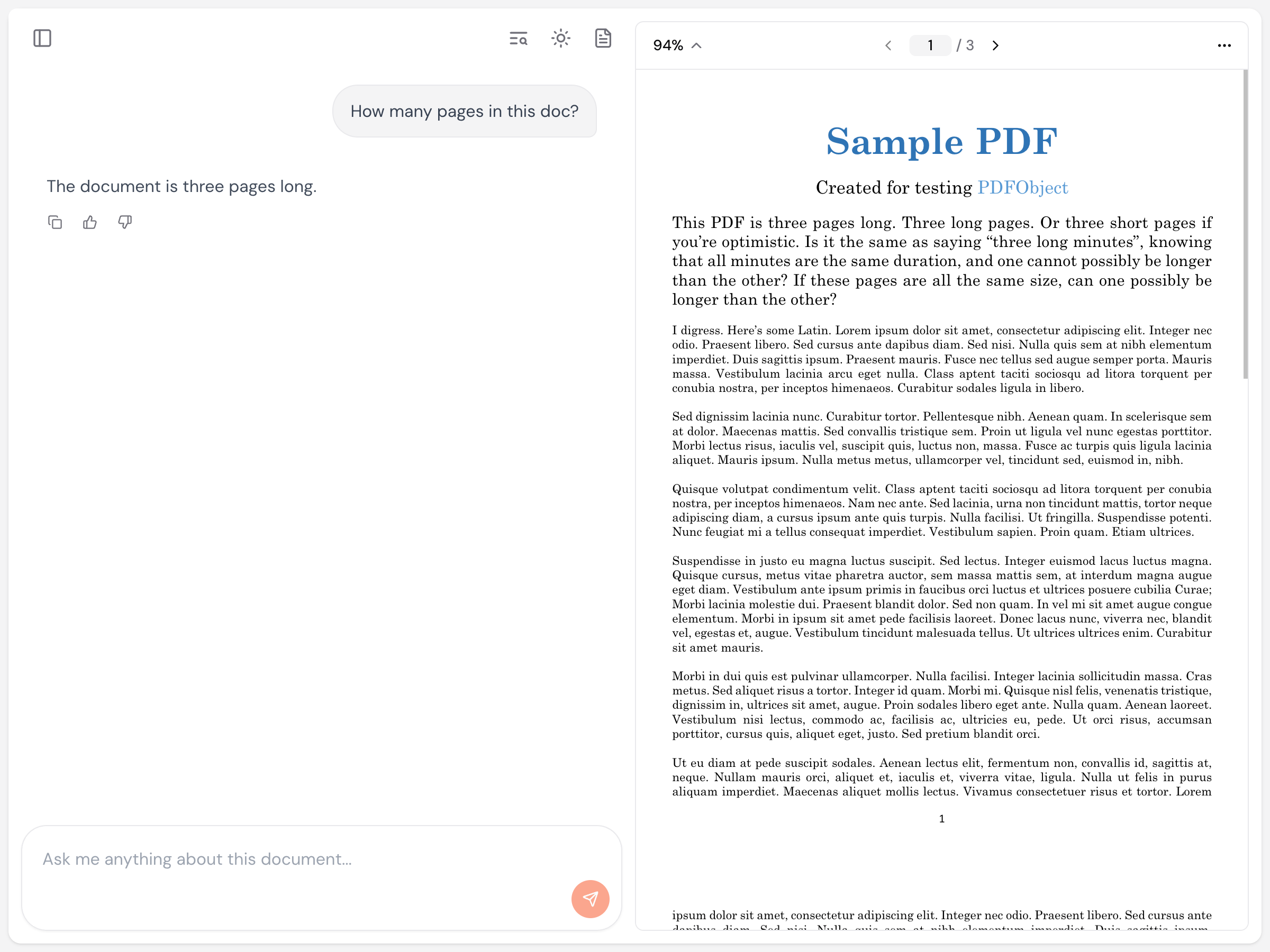

Chat with PDF

Extract valuable insights from documents by having natural conversations with your PDFs using context-aware AI.

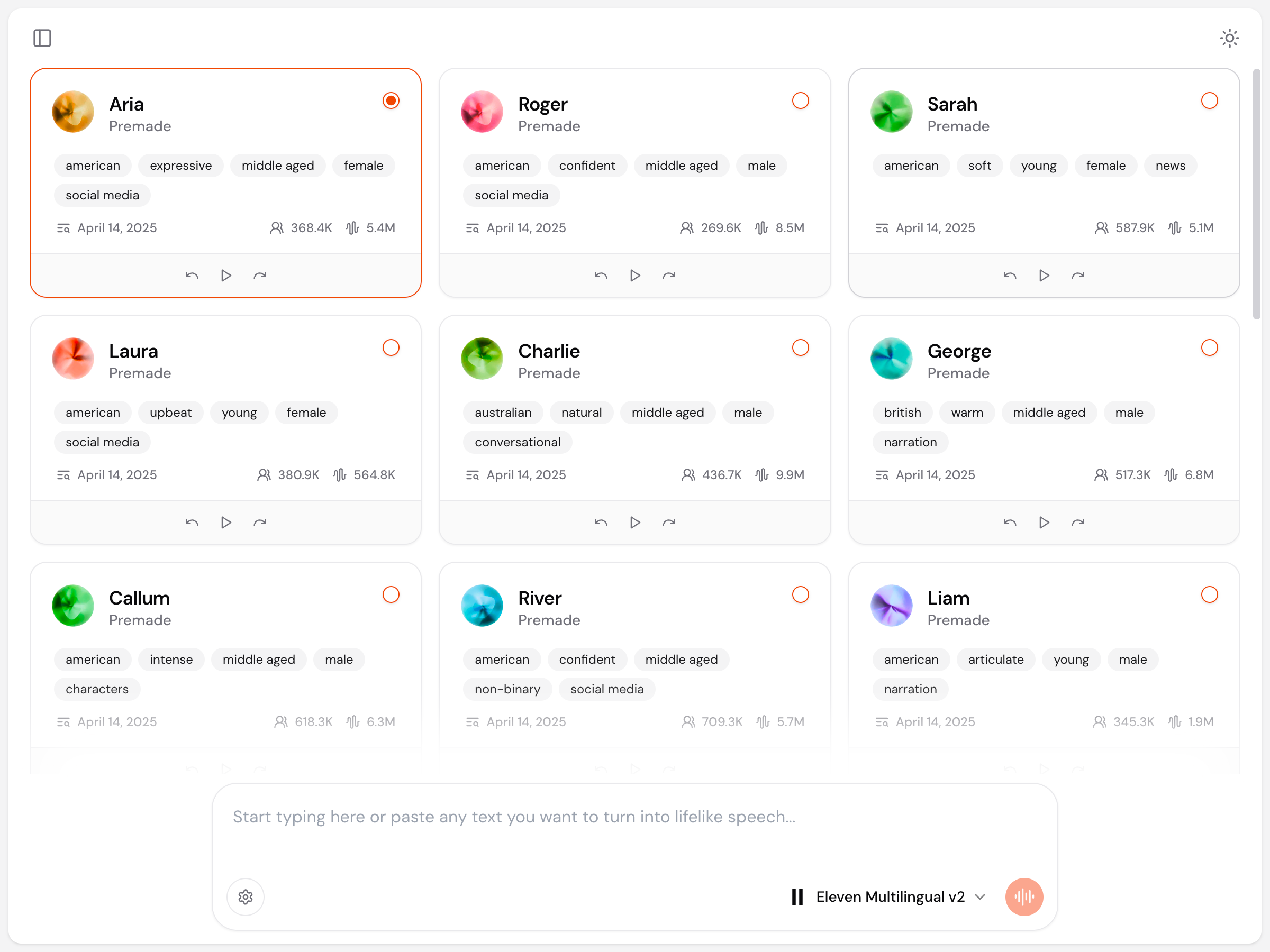

Text to speech

Convert written content into lifelike speech with support for more than 5,000 voices across multiple languages and styles.

Agents

Develop autonomous AI agents capable of executing complex tasks by orchestrating multiple AI models and tools.

Scope of this documentation

This documentation focuses specifically on the AI features, architecture, and demo applications included in the TurboStarter AI kit. For core framework topics such as authentication and billing, refer to the Core documentation.

Our goal is to guide you through setting up, customizing, and deploying the AI starter kit efficiently. Where relevant, we include links to official documentation for the integrated AI providers and libraries.

Setup

Getting started with TurboStarter AI requires configuring the core applications first. For detailed setup instructions, refer to:

Web app setup

Follow our step-by-step guide in the Core web documentation to set up your web application.

Mobile app setup

Use our detailed guide in the Core mobile documentation to configure your mobile application.

After establishing the core applications, you can configure specific AI providers and demo applications using the dedicated sections in this documentation (see sidebar). For a quick start, you might also want to check our TurboStarter CLI guide to bootstrap your project in seconds.

When working with the AI starter kit, remember to use the ai repository instead of core for Git commands. For example, use git clone turbostarter/ai rather than git clone turbostarter/core.

Deployment

Deploying TurboStarter AI follows the same process as deploying the core web application. Ensure you configure all necessary environment variables, including those for your selected AI providers (like OpenAI, Anthropic, etc.), in your deployment environment.
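One way to catch missing provider configuration before deploying is a small startup check. The sketch below is an assumption-laden example rather than part of the kit: the `requiredByProvider` map and the `missingEnvVars` helper are hypothetical, and the variable names shown are placeholders to be confirmed against each provider's documentation.

```typescript
// Hypothetical mapping from provider to the environment variables it needs.
// The names here are placeholders; confirm them in each provider's docs.
const requiredByProvider: Record<string, string[]> = {
  openai: ["OPENAI_API_KEY"],
  anthropic: ["ANTHROPIC_API_KEY"],
};

// Returns the names of required variables that are unset or empty.
// In a Node.js app, `env` would typically be `process.env`.
function missingEnvVars(
  providers: string[],
  env: Record<string, string | undefined>,
): string[] {
  return providers
    .flatMap((provider) => requiredByProvider[provider] ?? [])
    .filter((name) => !env[name]);
}
```

Calling a check like this at startup and failing fast when the result is non-empty tends to surface configuration mistakes earlier than a failed provider request would.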

For comprehensive deployment instructions across various platforms, consult our core deployment guides:

Deployment checklist

General checklist before deploying the web app.

Vercel

Streamlined deployment process for Vercel hosting.

Railway

Step-by-step guide for deploying to Railway.

Docker

Container-based deployment using Docker.

Other providers

Additional guides for Netlify, Render, AWS Amplify, Fly.io and more.

For mobile app store deployment, refer to our mobile publishing guides:

Publishing checklist

Comprehensive pre-publishing verification for mobile applications.

Updates

Best practices for managing updates to published mobile apps.

Each AI demo app may have specific deployment considerations, so check their dedicated documentation sections for additional guidance.

llms.txt

Access the complete TurboStarter documentation in Markdown format at /llms.txt. This file contains all documentation in an LLM-friendly format, enabling you to ask questions about TurboStarter using the most current information.

Example usage

To query an LLM about TurboStarter:

- Copy the documentation contents from /llms.txt

- Use this prompt format with your preferred LLM:

Documentation:

{paste documentation here}

---

Based on the above documentation, answer the following:

{your question}

Let's build amazing AI!

We're excited to help you create innovative AI-powered applications quickly and efficiently. If you have questions, encounter issues, or want to showcase your creations, connect with our community:

Happy building! 🚀
